Uber.com Performance

A year-long performance audit and refactor of uber.com

Summary

Uber.com was unlike any website I had ever worked on. It touched every line of business at Uber, had over 4 million pages, and was translated into 50 languages. The start of this project was difficult for me on many levels. In my previous roles, I had always worked on behalf of an agency, which matters because agencies rarely get the opportunity to work on higher-level projects like web performance. So when I was tasked with focusing on the performance of uber.com, I started out extremely unsure of what was expected of me. Over the next year, I set and iterated on a few goals that led me to success.

In this writeup we’ll be covering three major topics:

- All that went into running and maintaining uber.com.

- My larger contributions to uber.com.

- How my contributions led to my success at Uber.

Who is Uber.com?

If you feel like the dumbest person in the room, then you're in the right room. Below is the engineering team behind uber.com.

What is Uber.com?

As you would probably guess, uber.com is a website... a website that serves as the nexus to educate, promote, and sell services for every line of business at Uber.

It's also a website with over 4 million pages!

and a website that serves every line of business at Uber, including:

- Jump

- Work

- Drive

- Restaurants

- Ride

- Uber

- Business

- Elevate

- Money

- Freight

Built by more than 2,000 authors worldwide

Translated into more than 50 languages

Technology & Architecture

Uber.com's technology is seriously the largest bowl of buzzword soup I've ever had the pleasure of eating. In the interest of time, I won't be able to explain the entire architecture, only feed you small spoonfuls.

🥞 Stack

It's a tall stack of pancakes here. I encourage you to take your time and familiarize yourself with the diagram and the technologies that uber.com is built upon.

Programming Languages

Golang

- Microservices

- Database

- HTTP / RPC

NodeJS

- All Purpose Scripting

- Server

JSX/HTML/CSS

- Templating & Styling

JavaScript

- Everything

Framework & Libraries

Fusion.js

- Isomorphic Koa

- Deps Injection

- SSR

React

- UI

- State Manager

Styletron

- CSS-in-JS

- Atomic CSS

Internal Services

CMS

- Block CMS

- i18n Integration

- JSON Schema

Site

- Marketing Site

- Webpack

Components

- Storybook

- Jest

- Lerna

3rd Party Services

Optimizely

- A/B Testing

GCP

- Hosting

- Edge Caching

Cloudinary

- Image Optimization

Architecture

In the interest of time, the architecture below focuses on the front end. These three repositories come together to build the front end of uber.com.

Components

CMS

Sites

Page Renderer

This system has many layers and boundaries. Data flows from the CMS down to the Page. The diagram below describes this data flow through those layers and boundaries at a high level.
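To make the CMS-to-Page data flow concrete, here is a minimal TypeScript sketch. It assumes the CMS delivers a page as an ordered list of blocks, each with a `type` and author-supplied props, and that a registry maps each type to a renderer. Every name here (`PageData`, `blockRegistry`, `renderPage`) is hypothetical, and plain string templates stand in for the React components uber.com actually used.

```typescript
// Hypothetical sketch of CMS data flowing down to a rendered page.
// Names are illustrative, not uber.com's actual code. String templates
// stand in for React components, and no HTML escaping is done here,
// which a real renderer would need.

// One block instance as it might arrive from the CMS.
interface BlockData {
  type: string;                     // which Block renderer to use
  props: { [key: string]: string }; // author-supplied content
}

// A page as it might arrive from the CMS.
interface PageData {
  title: string;
  blocks: BlockData[];
}

type BlockRenderer = (props: { [key: string]: string }) => string;

// Block layer: registry mapping block types to renderers.
const blockRegistry: { [type: string]: BlockRenderer } = {
  hero: (p) => `<section class="hero"><h1>${p.heading}</h1></section>`,
  button: (p) => `<a class="button" href="${p.href}">${p.label}</a>`,
};

// Page layer: render each CMS block in order, skipping unknown types
// instead of failing the whole page.
function renderPage(page: PageData): string {
  const body = page.blocks
    .map((block) => {
      const render = blockRegistry[block.type];
      return render ? render(block.props) : "";
    })
    .join("");
  return `<main data-title="${page.title}">${body}</main>`;
}
```

Skipping unknown block types rather than throwing is one reasonable choice for a site with thousands of authors: a single bad block then can't take down an entire page.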

The Block System

Uber.com was made of many small components, which we composed into what we called “Blocks”. A Block is simply a composition of components plus a Schema file. Below is a deep dive into the more than 160 Blocks that make up uber.com.

Component Architecture

There are three major layers to the component architecture: Page components, Block components, and Element components.

Page

Block

Elements

Shared Components

With more than 160 components, uber.com was flexible enough for authors to author nearly every part of a page. This included the navigation system, which was shared across all lines of business:

- Jump

- Work

- Uber

- Business

- Elevate

- Eats

- Drive

- Money

- Ride

- Freight

Dynamic Blocks

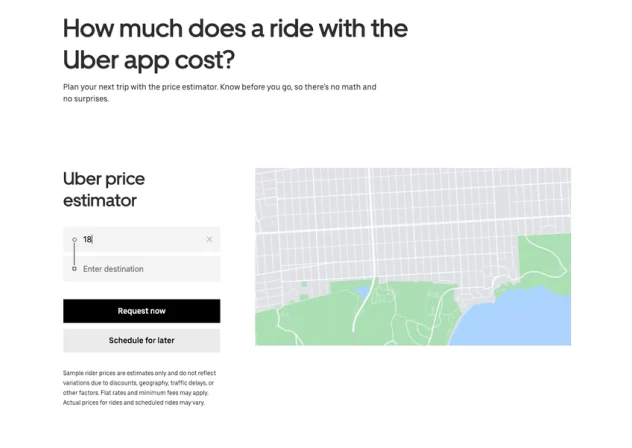

Sometimes a Block's data came not from authors but from a third-party service like Google Places or an application like Uber Eats. Below are a few examples of uber.com's dynamic blocks.

Storybook

Storybook is a tool for UI development. It makes development faster and easier by isolating components. This allows you to work on one component at a time.

Block Schemas

Every Block comes with a Schema. This Schema is a map of types, which can be primitive, like string or number, or more complex, like Array or DateTime. Schema types define the shape of a Block, and they are also used to generate the form needed to author a Block. We'll talk more about the authoring experience, but first let's look at an example: the Button Schema.
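The original Button Schema isn't reproduced here. As a stand-in, here is a hypothetical TypeScript sketch of a schema as a map of types, showing both uses described above: defining a Block's shape (validation) and generating its authoring form. The type names, the Button fields, and the form-control names are all assumptions.

```typescript
// Hypothetical Block schema sketch: a map of field names to types.
// Field names, type names, and form-input kinds are illustrative
// assumptions, not Uber's actual schema format.

// Primitive types (string, number) plus a couple of richer ones.
type FieldType = "string" | "number" | "datetime" | "string[]";

interface FieldDef {
  type: FieldType;
  required: boolean;
  label: string; // label shown to authors in the generated form
}

type BlockSchema = { [field: string]: FieldDef };

// A stand-in Button schema.
const buttonSchema: BlockSchema = {
  label: { type: "string", required: true, label: "Button text" },
  href: { type: "string", required: true, label: "Link URL" },
  trackingTags: { type: "string[]", required: false, label: "Tracking tags" },
};

// Which form control an authoring UI might render for each type.
const inputFor: { [T in FieldType]: string } = {
  string: "text",
  number: "number",
  datetime: "datetime-local",
  "string[]": "repeatable-text",
};

interface FormField {
  name: string;
  label: string;
  input: string;
  required: boolean;
}

// Use 1: derive the authoring form from the schema.
function formFromSchema(schema: BlockSchema): FormField[] {
  return Object.keys(schema).map((name) => {
    const def = schema[name];
    return { name, label: def.label, input: inputFor[def.type], required: def.required };
  });
}

// Use 2: check that authored content matches the Block's shape.
function validate(schema: BlockSchema, data: { [field: string]: unknown }): string[] {
  const errors: string[] = [];
  Object.keys(schema).forEach((name) => {
    const def = schema[name];
    const value = data[name];
    if (value === undefined) {
      if (def.required) errors.push(`${name} is required`);
      return;
    }
    const ok =
      def.type === "string" ? typeof value === "string"
      : def.type === "number" ? typeof value === "number"
      : def.type === "datetime" ? typeof value === "string" && !isNaN(Date.parse(value))
      : Array.isArray(value) && value.every((v) => typeof v === "string");
    if (!ok) errors.push(`${name} should be ${def.type}`);
  });
  return errors;
}
```

Keeping one schema as the single source of truth means the form authors see and the shape the renderer expects can't drift apart.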

Content Management System

With more than 2,000 authors worldwide, Uber needed a tool to manage content. Chameleon UI was that tool.

Authoring Experience


Internationalization

As a global company, Uber had a very tight workflow for translations. Translations were kicked off through Chameleon, and within a week or so a page could be translated into more than 50 languages.

Shipping Code

Updating uber.com was pretty tedious. It required three sequential code reviews across the three repositories: components, cms, and sites. Landing code on master triggered continuous integration, which ran unit tests, type checking, and linting.

Visual Regression Testing

With 160+ components, 50+ languages, and 4 million pages, manual regression testing is essentially impossible. To gain some confidence that we weren't breaking the site before deploying to production, we ran hundreds of core pages through visual regression testing. Each run generated a report where we could see whether anything looked out of the ordinary. Below is an example of how we accidentally shipped an RTL orientation of our homepage.

main

dev

Diff Output
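At its core, a visual regression check compares a screenshot from one branch against another. Below is a minimal sketch of that comparison, assuming both screenshots are same-sized RGBA pixel arrays. The `main`/`dev` naming mirrors the panels above; the tolerance and the 1% review threshold are illustrative assumptions, not the settings of the tool uber.com used.

```typescript
// Minimal sketch of the core of a visual regression check.
// Assumes screenshots are already decoded into RGBA arrays.

interface Screenshot {
  width: number;
  height: number;
  rgba: number[]; // width * height * 4 values, each 0..255
}

// Count pixels where any channel differs by more than `tolerance`.
function countDiffPixels(a: Screenshot, b: Screenshot, tolerance: number): number {
  if (a.width !== b.width || a.height !== b.height) {
    throw new Error("screenshots must have the same dimensions");
  }
  let diff = 0;
  for (let i = 0; i < a.width * a.height; i++) {
    const offset = i * 4;
    for (let c = 0; c < 4; c++) {
      if (Math.abs(a.rgba[offset + c] - b.rgba[offset + c]) > tolerance) {
        diff++;
        break; // one differing channel is enough for this pixel
      }
    }
  }
  return diff;
}

// Flag a page for human review when more than `maxRatio` of pixels changed.
function needsReview(main: Screenshot, dev: Screenshot, tolerance = 8, maxRatio = 0.01): boolean {
  const changed = countDiffPixels(main, dev, tolerance);
  return changed / (main.width * main.height) > maxRatio;
}
```

Real tools also handle anti-aliasing and emit a diff image like the Diff Output panel, but counting changed pixels is enough to decide which pages a human should look at. A mirrored RTL homepage would change most pixels and be flagged immediately.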

Automated E2E Testing

We also had core functionality that visual regression testing couldn't pick up. We ran end-to-end (E2E) tests with Puppeteer across these major features to make sure we didn't ship broken code to production.

Monitoring

Uber.com monitored the health of the client and server in real time. With tools like Grafana and client/server metrics, developers got real-time notifications whenever our front end or back end was failing.

Optimizing

A base Fusion.js app is roughly 60 KB, and our app was a whopping 1.2 MB. This gave me the confidence to set a pretty straightforward goal:

- 50% reduction in JS Bundle

The bundle size of uber.com kept growing with every deployment, and before my first optimization it had reached 1.2 MB! At the time, I knew I was no performance engineer and that a 50% bundle reduction was an aggressive goal. That said, I had never in my life worked on a marketing website with such an enormous bundle.

Bundling, transpiling, & module systems

The module systems in JavaScript are a nightmare. There are too many standards, none of them play well with one another, and each has its pros and cons. That said, there is a clear winner in terms of performance: ECMAScript modules, or ESM for short.

Uber.com is a Fusion.js application whose JavaScript is bundled into small chunks by webpack, while the components are only transpiled with Babel. When a library that is only transpiled feeds into an app that webpack bundles, the key to the best optimization is preserving the ESM import/export syntax, because webpack's tree shaking relies on that static structure. The problem is that, until very recently, Node.js didn't support ESM. So for everything to play nicely in both development and production, we needed to transpile the Component Monorepo to both CJS and ESM.

There are tons of other small gotchas inside package.json, like adding the `module` field and `"sideEffects": false`. That said, once we properly exported ES modules, our JavaScript bundle on uber.com shrank by 30% because components could finally be tree-shaken!
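The sketch below is a simplified TypeScript model of why the dual build matters; it is not webpack's actual resolver. It assumes a hypothetical components package whose `main` points at the CJS build (what Node.js `require()` reads) and whose `module` points at the ESM build (which webpack prefers over `main` by default), with `"sideEffects": false` declared. The package name and paths are made up.

```typescript
// Simplified model of entry-point resolution and tree-shaking eligibility.
// Real resolution also involves "exports", "browser", and resolver config.

interface PackageManifest {
  name: string;
  main: string;          // CommonJS build, read by Node.js require()
  module?: string;       // ES module build, preferred by bundlers
  sideEffects?: boolean; // false => bundler may drop unused modules
}

type Consumer = "node" | "webpack";

function resolveEntry(pkg: PackageManifest, consumer: Consumer): string {
  // Bundlers look for "module" first and fall back to "main".
  if (consumer === "webpack" && pkg.module) return pkg.module;
  return pkg.main;
}

// In this simplified model, tree shaking is fully effective only when the
// bundler receives ESM (static import/export) and the package declares
// that dropping unused modules is safe.
function canTreeShake(pkg: PackageManifest, consumer: Consumer): boolean {
  const usesEsm = resolveEntry(pkg, consumer) === pkg.module;
  return consumer === "webpack" && usesEsm && pkg.sideEffects === false;
}

// Hypothetical dual-published components package.
const components: PackageManifest = {
  name: "@example/components",
  main: "dist-cjs/index.js",
  module: "dist-esm/index.js",
  sideEffects: false,
};
```

The same package serves both consumers: Node.js (for SSR and tests) gets CJS through `main`, while webpack gets ESM through `module` and can drop the components a page never imports.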

Image Processing with Lazy loading

Uber.com is a media-rich website; moreover, all of its images and videos are managed by our CMS and added by authors around the world, most of whom have little knowledge of what makes an image optimized for the web. I'm not pointing fingers at authors: image optimization is a complicated topic. For anyone interested in learning more, I'd point you to Addy Osmani's 2019 book Essential Image Optimization.

STEP 1

Our first optimization was to leverage a service called Cloudinary. Cloudinary has some killer features that make image optimization a breeze. It's a file-storage CDN with an image-processing layer in front; I like to describe it as “Photoshop in the cloud!” Let's take the first image on uber.com's current homepage as an example.

https://res.cloudinary.com/morningharwood/image/upload/v1599696271/uber/eats.jpg

STEP 2

Each field above could be improved. Starting with dimensions: if the user is viewing the website at WXGA (1366x768) resolution, our image's dimensions are unnecessarily large. Cloudinary offers width and height flags (`w_` and `h_`) that you pass in the img src URL.

https://res.cloudinary.com/morningharwood/image/upload/h_768/v1599696271/uber/eats.jpg

STEP 3

A JPG file is pretty optimized relative to PNG and GIF; however, WebP is a well-supported image format whose compression is superior to JPG. Cloudinary's `f_auto` flag automatically delivers a format like WebP to browsers that support it.

https://res.cloudinary.com/morningharwood/image/upload/f_auto,h_768/v1599696271/uber/eats.jpg

STEP 4

So far, so good, but we can do better! Let's talk about image quality and compression. Cloudinary offers tons of options here. The example to the left sets the quality extremely low, so you can see the compression artifacts; however, the best and simplest option is the `q_auto:eco` flag.

https://res.cloudinary.com/morningharwood/image/upload/f_auto,h_768,q_auto:eco/v1599696271/uber/eats.jpg

https://codesandbox.io/s/cloudinary-image-quality-orh0s?file=/src/App.js
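The transformations in these steps can be generated rather than hand-written. Here is a small sketch of a URL builder; the helper name and its options object are hypothetical, while the URL layout and the `f_auto`, `h_`, `w_`, and `q_` flags are the ones shown above.

```typescript
// Sketch of building Cloudinary delivery URLs. Layout:
// https://res.cloudinary.com/<cloud>/image/upload/<transformations>/v<version>/<public_id>
// where transformations are comma-separated flags.

interface CloudinaryOptions {
  autoFormat?: boolean; // f_auto: e.g. WebP for browsers that support it
  height?: number;      // h_<px>
  width?: number;       // w_<px>
  quality?: string;     // q_<value>, e.g. "auto:eco"
}

function cloudinaryUrl(cloud: string, version: number, publicId: string, opts: CloudinaryOptions): string {
  const flags: string[] = [];
  if (opts.autoFormat) flags.push("f_auto");
  if (opts.height !== undefined) flags.push(`h_${opts.height}`);
  if (opts.width !== undefined) flags.push(`w_${opts.width}`);
  if (opts.quality) flags.push(`q_${opts.quality}`);
  // Omit the transformation segment entirely when there are no flags.
  const transform = flags.length > 0 ? `${flags.join(",")}/` : "";
  return `https://res.cloudinary.com/${cloud}/image/upload/${transform}v${version}/${publicId}`;
}
```

Called with `{ autoFormat: true, height: 768, quality: "auto:eco" }` for `uber/eats.jpg`, it reproduces the STEP 4 URL; with no options it returns the original STEP 1 URL.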

STEP 5

Finally, using the Intersection Observer API, I was able to decrease the initial page download by 40% by lazy loading images that existed below the user's viewport.
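Here is a minimal sketch of that lazy-loading pattern, assuming images start with their real URL in a `data-src` attribute that is copied into `src` once the image intersects the viewport. Small local interfaces stand in for the DOM types so the callback can be exercised outside a browser; the `data-src` convention and the 200px margin are assumptions, not necessarily what uber.com used.

```typescript
// Lazy-loading callback for the Intersection Observer API.
// Local interfaces mirror the parts of the DOM types the callback uses.

interface LazyImage {
  src: string;
  dataset: { [key: string]: string | undefined }; // dataset.src <-> data-src
}

interface IntersectionLike {
  isIntersecting: boolean;
  target: LazyImage;
}

interface ObserverLike {
  unobserve(target: LazyImage): void;
}

// Load each image once it becomes visible, then stop observing it.
function onIntersect(entries: IntersectionLike[], observer: ObserverLike): void {
  entries.forEach((entry) => {
    if (!entry.isIntersecting) return; // still below the viewport
    const img = entry.target;
    const realSrc = img.dataset.src;
    if (realSrc) img.src = realSrc;    // triggers the actual download
    observer.unobserve(img);           // each image only needs loading once
  });
}

// Browser wiring might look like this (not executed here):
// const io = new IntersectionObserver(
//   (entries, obs) => onIntersect(entries as any, obs as any),
//   { rootMargin: "200px" } // begin loading shortly before the image scrolls in
// );
// document.querySelectorAll<HTMLImageElement>("img[data-src]")
//   .forEach((img) => io.observe(img));
```

Unobserving after the first load keeps the observer's work proportional to the images that haven't been shown yet.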

Networking

Full HTTP/2 Support

All of our JS and images were served from the CloudFront edge over HTTP/2. That said, dozens of resources were still coming from origin over HTTP/1.1. I admittedly didn't do this work myself; however, I worked with Uber's Infra team for over a year to get it deployed to production. The performance gain is difficult to measure here, but published statistics suggest up to a 30% decrease in network latency when going from HTTP/1.1 to HTTP/2.

L2 Edge Caching

Uber.com has over 4 million pages, and to build them it relies on dozens of third-party services for content, localization, geolocation, and more. Any one of these could go down at any time and potentially take pages of our website down with it. GCP caching is amazing because it caches responses from origin and serves them from the edge. That said, implementing GCP caching comes with a bunch of gotchas. The biggest is that your server cannot send a Set-Cookie header in the response; otherwise GCP won't cache it. I implemented GCP caching on www.uber.com/airports, where it reduced our Time to First Byte (TTFB) by 1.2s.
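Below is a framework-agnostic sketch of preparing a response for edge caching: for cacheable routes it drops Set-Cookie (the gotcha described above) and sets a public `Cache-Control` header so the CDN may store the response. The route allow-list, the TTL, and the function names are hypothetical; in a Fusion.js (Koa) app, logic like this could live in middleware.

```typescript
// Sketch: make responses on selected routes safe to cache at the edge.

type HeaderMap = { [name: string]: string };

// Hypothetical allow-list of route prefixes that serve non-personalized pages.
const CACHEABLE_PREFIXES = ["/airports"];

function isCacheable(path: string): boolean {
  return CACHEABLE_PREFIXES.some((prefix) => path === prefix || path.indexOf(prefix + "/") === 0);
}

function edgeCacheHeaders(path: string, headers: HeaderMap, maxAgeSeconds: number): HeaderMap {
  if (!isCacheable(path)) return headers; // leave other routes untouched
  const out: HeaderMap = {};
  Object.keys(headers).forEach((name) => {
    const lower = name.toLowerCase();
    // Header names are case-insensitive: drop every Set-Cookie variant and
    // any existing Cache-Control that is replaced below.
    if (lower !== "set-cookie" && lower !== "cache-control") out[name] = headers[name];
  });
  out["Cache-Control"] = `public, max-age=${maxAgeSeconds}`;
  return out;
}
```

Restricting this to an allow-list matters: stripping cookies from routes that depend on them (sign-in, personalized content) would break those pages.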

Results

For nearly six months, I focused most of my effort on the performance of uber.com. In the end, I was able to exceed my own expectations!

- 50% reduction in Page Download

- 50% reduction in JS Bundle

- 50% reduction in TTFB

- 50% reduction in TTI

Retrospect

For nearly a year, I focused most of my effort on the performance of uber.com, and in the end I exceeded my own expectations. By far, uber.com was the most influential project of my career. For various reasons (looking at you, COVID), 2020 marked the end of an era. The uber.com team was reshuffled, and I moved on to tech lead a team of engineers on Uber Eats Web.